Statistical improvements in functional magnetic resonance imaging analyses produced by censoring high-motion data points

Josh Siegel, Jonathan Power, Joe Dubis, Alecia Vogel, Jessica Church, Brad Schlaggar, Steve Petersen

Human Brain Mapping 2014 May; 35(5):1981-96

PubMed link

Figures (ppt)

This paper is older than it looks. I think we started this paper in 2011 and maybe first submitted it in 2012. At the time Josh was a research assistant in the lab, having finished his honors thesis with Steve but not yet applied to medical school (he is now an MD/PhD student at WashU working with Maurizio Corbetta). Josh was fishing around for a project of his own in addition to his regular work. We had just implemented censoring ("scrubbing") in the resting state analyses, where it was obviously helpful in removing motion artifact, so we decided to see how much scrubbing impacted task analyses.

It's a simple article. We take cohorts of children, adolescents, and adults, and examine the subject-level (level I) and group-level (level II) effects modeled before and after excluding timepoints contaminated by motion (timepoints are excluded by adding a delta-function regressor to the general linear model for each "bad" timepoint). Across the board, statistical power is boosted, in proportion to how much motion was in the dataset. So improvements are greatest for children, modest for adolescents, and slight for adults. The improvements mainly come from reducing the variance of the estimated timeseries across subjects - the average waveforms of the conditions are similar before and after censoring, but the timeseries are more uniform across subjects when motion-contaminated volumes are excluded.
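To make the delta-function idea concrete, here is a minimal sketch in Python with NumPy. It is not the code used in the paper: the toy boxcar design, the simulated spikes, and the helper names `add_censor_regressors` and `fit_glm` are all assumptions made for illustration. It shows that giving each "bad" timepoint its own one-hot regressor yields the same task estimates as dropping those timepoints from the fit.

```python
import numpy as np

def add_censor_regressors(design, censor_mask):
    """Append one delta-function (one-hot) column per censored timepoint."""
    design = np.asarray(design, dtype=float)
    bad = np.flatnonzero(censor_mask)
    spikes = np.zeros((design.shape[0], bad.size))
    spikes[bad, np.arange(bad.size)] = 1.0
    return np.hstack([design, spikes])

def fit_glm(design, y):
    """Ordinary least-squares fit; returns one beta per design column."""
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta

# Toy data (hypothetical): a boxcar task effect of 2.0 on a baseline of 100.
rng = np.random.default_rng(0)
n_tp = 200
task = (np.arange(n_tp) % 20 < 10).astype(float)
X = np.column_stack([np.ones(n_tp), task])
y = 100.0 + 2.0 * task + rng.normal(0.0, 0.5, n_tp)

# Simulated motion spikes at five timepoints, all flagged for censoring.
censor = np.zeros(n_tp, dtype=bool)
censor[[30, 31, 32, 110, 111]] = True
y[censor] += 15.0

beta_raw = fit_glm(X, y)
beta_censored = fit_glm(add_censor_regressors(X, censor), y)[: X.shape[1]]
beta_dropped = fit_glm(X[~censor], y[~censor])

print("task beta, no censoring:   ", beta_raw[1])
print("task beta, delta functions:", beta_censored[1])
print("task beta, rows dropped:   ", beta_dropped[1])
```

Each delta-function regressor absorbs its timepoint entirely, so that timepoint no longer influences the task betas. One practical reason to prefer this over deleting rows is that the timeseries keeps its original length and timing.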

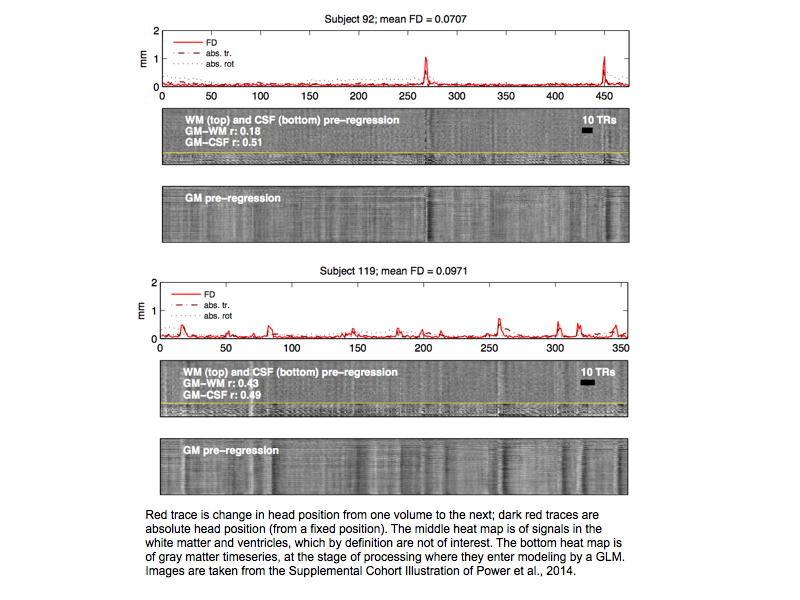

The reason for this improvement is pretty obvious if you look at the timeseries (see the images below). The signal during motion is often considerably disrupted, which screws with the fits of the timeseries. The spikes in the bright red traces are when the head moves, and there are also darker red traces showing how much the head has moved, in absolute terms, from an origin position. The gray-scale plots show the signals at thousands of individual voxels over the scan. The middle panels are voxels in white matter (above the yellow line) and ventricles (below the yellow line), signals that are by definition not of interest. The bottom panels show gray matter timeseries, the data that are fit by a GLM. The GM-WM and GM-CSF numbers are the correlations of the mean gray matter timeseries with the mean white matter and mean ventricular timeseries, respectively.

Motion-related variance is large-amplitude and stands out from other patterns in the data. The scans being shown are task-free, but task scans have a similar appearance, and in task-free scans there are no doubts about whether a signal is "supposed" to be elicited due to the task environment. During fitting by a GLM, outlying values during motion can pull the signal fitted to a set of trials away from where it should be. Excluding those outlying values sidesteps those problems. Similar benefits would result from interpolation to reduce the "outlyingness" of the bad timepoints (though the data really aren't "there" anymore).
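As a rough illustration of the interpolation alternative, the sketch below replaces flagged timepoints with values linearly interpolated from their uncensored neighbors. Linear interpolation and the function name `interpolate_censored` are assumptions chosen for simplicity; the text above does not specify an interpolation method.

```python
import numpy as np

def interpolate_censored(ts, censor_mask):
    """Replace censored samples with linear interpolation from kept samples.

    Censored samples at the very start or end of the run take the value of
    the nearest kept sample (the default edge behavior of np.interp).
    """
    ts = np.asarray(ts, dtype=float)
    censor_mask = np.asarray(censor_mask, dtype=bool)
    t = np.arange(ts.size)
    out = ts.copy()
    out[censor_mask] = np.interp(t[censor_mask], t[~censor_mask], ts[~censor_mask])
    return out

# Toy example: one outlying value in an otherwise smooth ramp.
signal = np.array([0.0, 1.0, 100.0, 3.0, 4.0])
bad = np.array([False, False, True, False, False])
repaired = interpolate_censored(signal, bad)
print(repaired)
```

The interpolated values are plausible placeholders, not recovered data, so they reduce the pull of the outliers on the fit without adding real information.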

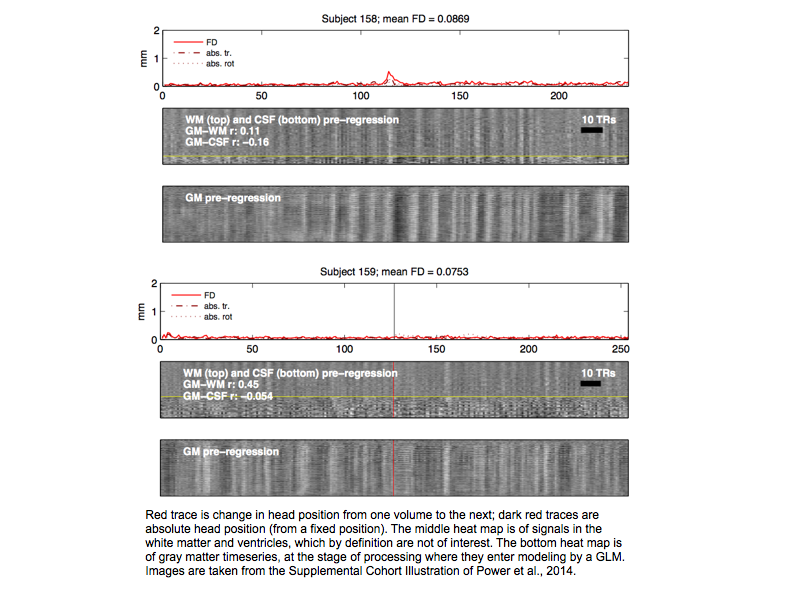

I want to emphasize that there is also plenty of large-amplitude artifactual variance that isn't motion-related. For example, here are two more subjects where you can see that some other factor is altering the signal in artifactual ways that would interfere with the GLM.

Many task studies do little to denoise the data prior to the level I analyses (fitting the timeseries to a GLM). As these images show, there is probably much statistical power to be gained by more aggressive denoising prior to or during the fitting process. Kendrick Kay had an interesting paper on this topic recently, and I know that the folks developing the multi-echo techniques are interested in this topic as well. Nuisance regressions of white matter or ventricular signals may be helpful (you can see the correspondence of such signals with gray matter signals in the figures above). The censoring procedure used in this study can be helpful for volumes that are beyond rescue by other denoising techniques.
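A nuisance regression of this kind can be sketched in a few lines. This is a simplified example on simulated data, not the pipeline from the paper: the array shapes, the shared artifact, and the function name `regress_out_nuisance` are assumptions. In a task analysis the nuisance signals would more likely be included as extra columns in the level I GLM than removed in a separate step beforehand.

```python
import numpy as np

def regress_out_nuisance(gm, nuisance):
    """Remove nuisance signals (plus a constant) from every voxel by least squares.

    gm:       array of shape (n_timepoints, n_voxels)
    nuisance: array of shape (n_timepoints, n_regressors)
    Returns the residuals, which are mean-centered per voxel.
    """
    gm = np.asarray(gm, dtype=float)
    design = np.column_stack([np.ones(gm.shape[0]), nuisance])
    beta, *_ = np.linalg.lstsq(design, gm, rcond=None)
    return gm - design @ beta

# Simulated data (hypothetical): gray matter voxels share an artifact
# that also appears in the mean white matter signal.
rng = np.random.default_rng(1)
n_tp, n_vox = 150, 50
wm_mean = rng.normal(size=n_tp)
csf_mean = rng.normal(size=n_tp)
gm = rng.normal(size=(n_tp, n_vox)) + 3.0 * wm_mean[:, None]

cleaned = regress_out_nuisance(gm, np.column_stack([wm_mean, csf_mean]))

# GM-WM correlation of the compartment means, before and after regression.
r_before = np.corrcoef(gm.mean(axis=1), wm_mean)[0, 1]
r_after = np.corrcoef(cleaned.mean(axis=1), wm_mean)[0, 1]
print("GM-WM correlation before:", r_before)
print("GM-WM correlation after: ", r_after)
```

The before and after correlations correspond to the GM-WM numbers described for the figures: a high value suggests shared variance that a nuisance regressor could remove.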

Within the Petersen/Schlaggar lab, we tried adding censoring to task analyses in several datasets. In adults, generally, very little effect is produced, mainly because the adults move so little. But in pediatric datasets, where there is considerable motion, censoring can have appreciable effects. The effects of censoring can be consequential if, for example, without denoising you find activation in adults (decent statistical power to begin with due to relatively little artifact) but not in children (where artifact perhaps disrupts fits, spuriously decreasing power). With censoring (or other improved denoising), you could boost power in the children and perhaps reveal effects of comparable or at least more similar magnitude to those found in adults.

JDP 2/2/15